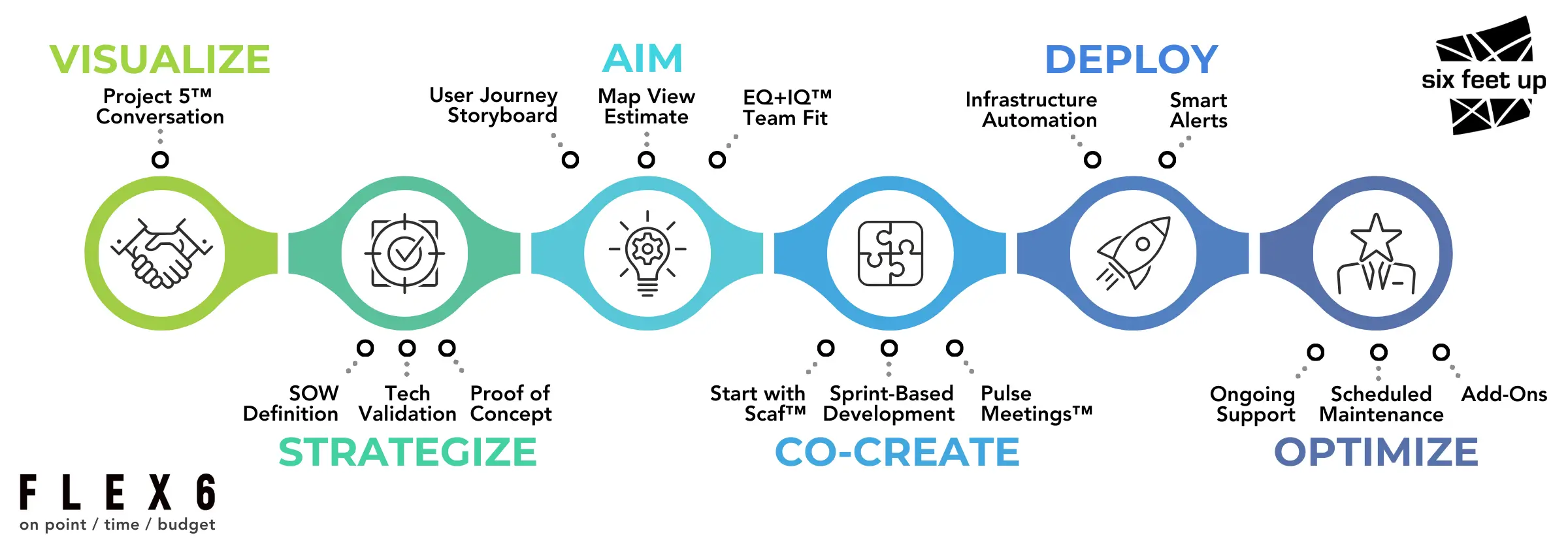

Streamline your data pipelines to support real-time decision-making. Deploy best practices across your Big Data stack.

Prototype Development

An iterative approach built on quick proofs of concept validates your Big Data innovations faster than a waterfall approach.

Repeatable Deployments

Go from Jupyter notebooks to cloud-native containers. Automate the delivery of your pipeline using Continuous Integration/Continuous Deployment (CI/CD). Prevent drift in your infrastructure using Infrastructure as Code (IaC) tools such as Terraform.

Observability

Add instrumentation to your ETL process to troubleshoot issues faster and find performance bottlenecks. Roll the data up into dashboards for real-time decision-making.

Data Pipeline Optimization

Simplify and modernize your data pipeline: move away from batch processing and implement real-time streaming into your data lake.

Technology Expertise

20+ years of software development and deployment experience with a focus on:

- Python / Django / Node.js

- AWS / GCP / Azure

- Databricks / Airflow

- React / Next.js / Angular

- PostgreSQL

- scikit-learn

- Kubernetes / Terraform

- Linux / FreeBSD

Recent Projects

Unlocking Value from Raw Time Series Signals

Healthcare technology startup

Building a Big Data Pipeline With Cloud Native Tools

A statewide healthcare system

Latest Blog Posts

Messy data breaks traditional matching. Semantic search with embeddings delivers accurate results from inconsistent inputs.

Learn how we streamlined data integration, minimized costs, and created a scalable process.

As your infrastructure scales up, how you manage your Airflow DAGs becomes critical.