If you are responsible for keeping modern applications reliable and scalable, Kubernetes has probably entered the conversation, often with equal parts excitement and anxiety.

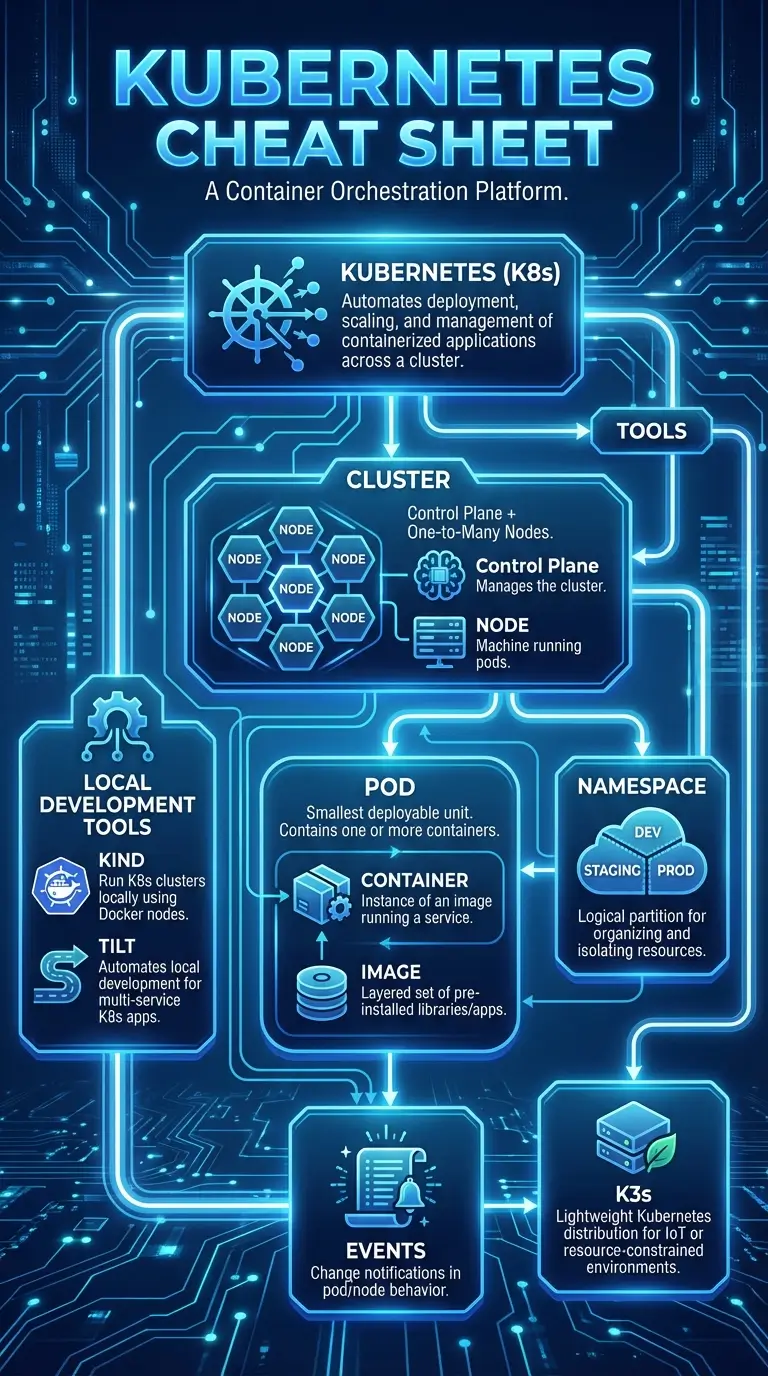

Kubernetes is an orchestration system for running containerized applications. In practical terms, you can describe infrastructure in configuration files and rely on Kubernetes to deploy, scale, and recover services automatically.

Done well, this shifts your organization from heroic releases and fragile servers to a more predictable, platform-driven model.

This guide covers what day-to-day life on Kubernetes looks like. It skips cluster configuration and YAML details, and it assumes you already have a Kubernetes environment or are actively evaluating it as the foundation for your next platform.

How your platform is run and managed changes when you adopt Kubernetes.

Instead of juggling bespoke scripts and one-off servers, you get a single orchestration layer that handles deploying, scaling, and recovering your containerized workloads.

As your needs grow, Kubernetes can scale with you. You can start with a small cluster, even a single node (though three nodes is the recommended minimum), and grow to hundreds or thousands of nodes without redesigning everything.

You are not betting on something experimental. Kubernetes runs in production at companies of all sizes and has a large community that shares patterns and answers real-world questions.

Plus, the platform evolves slowly and carefully. Changes are almost always backward compatible, so your teams can focus on applications instead of chasing breaking changes.

Kubernetes is also cloud-agnostic. Because your setup is defined in configuration, it is highly portable between hosting and cloud environments. If you need to move regions or switch providers, your workloads are much easier to relocate. This gives you options when costs, compliance, or strategies change.

You can work in realistic local environments, run the same workflows repeatedly, and debug issues quickly with Tilt, kind, and kubectl.

For development setups, Tilt and kind make it easy to simulate your Kubernetes stack locally. You simply define a Tiltfile, point it at your Dockerfile and Kubernetes config, then run tilt up. It feels similar to a Docker Compose workflow, but built for Kubernetes.

Tilt provides a web UI that sits in front of Docker and kubectl. It lets you see either a combined, system-wide view or a per-service view, and it watches your files and rebuilds images automatically. This is useful for checking configurations and understanding when and why services fail.
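To make the Tilt and kind workflow above more concrete, here is a minimal shell sketch of what a local setup might look like. The names `my-app`, `dev`, and `k8s/deployment.yaml` are placeholders, and `write_tiltfile` and `dev_up` are hypothetical helper functions, not part of Tilt or kind. Your real Tiltfile will depend on how your project is laid out.

```shell
# Sketch only: "my-app", "dev", and k8s/deployment.yaml are placeholder names.

# Write a minimal Tiltfile into the current directory.
write_tiltfile() {
  cat > Tiltfile <<'EOF'
# Build the image from the Dockerfile in the current directory
docker_build('my-app', '.')
# Load the Kubernetes manifests that reference the 'my-app' image
k8s_yaml('k8s/deployment.yaml')
# Forward localhost:8000 to the workload named 'my-app' (assumed name)
k8s_resource('my-app', port_forwards=8000)
EOF
}

# Make sure a kind cluster exists, point kubectl at it, then start Tilt.
dev_up() {
  local cluster="${1:-dev}"
  # Create the kind cluster only if it is not already listed
  if ! kind get clusters 2>/dev/null | grep -qx -- "$cluster"; then
    kind create cluster --name "$cluster"
  fi
  # kind names its kubectl context "kind-<cluster name>"
  kubectl config use-context "kind-$cluster"
  # Keep an existing Tiltfile; only write the sample when none is present
  [ -f Tiltfile ] || write_tiltfile
  tilt up
}
```

From your project directory, running `dev_up` (or `dev_up my-cluster`) would create the cluster if needed and start Tilt against it.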

kubectl: Your Everyday Control Surface

Beyond Tilt, your main interface for working with Kubernetes should be kubectl. It's a powerful CLI for managing many aspects of a Kubernetes system. In practice, here are some of the most important commands:

Examine the Running Services

kubectl get pods: This lists the pods in your current namespace along with their status. A pod wraps one or more containers and runs on a single node; replicas of the same service show up as separate pods, often spread across different nodes.

kubectl get pods -n <namespace>: If your cluster has more than one namespace, you can select a specific namespace to see the list of pods within it.

kubectl get pods -n kube-system: When running things with kind, you can see Kubernetes' own management services (e.g., the API server, CoreDNS, and other control plane components).

kubectl get namespaces: Provides a list of all the configured namespaces you can access.

Work with a Specific Service/Pod

kubectl logs <pod-name>: Using a pod name from get pods, this provides a combined view of the logs from that pod. It’s very useful for debugging.

kubectl describe pod <pod-name>: Again using a pod name from get pods, you can see the configuration details of the pod, for example the command running in the container, the state of the pod, and the resource limits.

kubectl exec -it <pod-name> -- bash: When the logs aren't enough, you can get shell access to a running pod to investigate further. This provides everything you'd expect from a typical bash shell, including all the environment variables that may have been used to configure things. If the image does not include bash, try sh instead.

Debug Broader Service/Pod Failures

kubectl get events: This shows a log of every time a pod changed, whether it was being created, scheduled, scaled, or terminated. This is very helpful when a service or container fails to start or run.

kubectl get events -A -w: Similar to the previous example, this gives a combined view of events across all namespaces (the -A) and continues to watch (the -w) for new events. This is especially useful when you manually restart a service or pod.

Tips and Tricks

alias k=kubectl

alias kgp='kubectl get pods'

alias kgs='kubectl get services'

For most developers, these commands and habits cover almost everything you need day-to-day. Beyond that, kubectl supports many additional flags and commands, which you can discover in the official documentation or by using the --help flag on almost any command.
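If you find yourself running the same describe, logs, and events commands every time something breaks, you could wrap them in a small shell function. The sketch below is one possible way to do that. `kdebug` is a hypothetical helper name, not a kubectl command, and the 50-line log tail is an arbitrary choice.

```shell
# Hypothetical helper (not part of kubectl): gather the usual debugging
# views for one pod. Usage: kdebug <pod-name> [namespace]
kdebug() {
  local pod="$1"
  local ns="${2:-default}"
  if [ -z "$pod" ]; then
    echo "usage: kdebug <pod-name> [namespace]" >&2
    return 1
  fi
  # Configuration, state, and resource limits of the pod
  kubectl describe pod "$pod" -n "$ns"
  # Last 50 log lines from the pod
  kubectl logs "$pod" -n "$ns" --tail=50
  # Events in the namespace, sorted by creation time (oldest first)
  kubectl get events -n "$ns" --sort-by=.metadata.creationTimestamp
}
```

If a container has restarted, `kubectl logs <pod-name> --previous` shows the logs from the previous instance, which is often where the actual error is.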

If you are working with multiple Kubernetes systems, you can also create separate kubeconfig files, one per system. This makes it easier to manage complex namespaced environments and to keep staging, testing, and production clusters clearly separated.
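One way to work with per-cluster kubeconfig files is a small switcher function, sketched below. It assumes a directory layout of one file per cluster, such as `~/.kube/clusters/staging.yaml`. That layout, the `KUBE_CLUSTER_DIR` variable, and the `kuse` function name are all made up for illustration. The only piece kubectl itself defines is the `KUBECONFIG` environment variable.

```shell
# Hypothetical layout: one kubeconfig file per cluster, e.g.
#   ~/.kube/clusters/staging.yaml
#   ~/.kube/clusters/production.yaml
# Usage: kuse <cluster-name>
kuse() {
  local name="$1"
  local file="${KUBE_CLUSTER_DIR:-$HOME/.kube/clusters}/$name.yaml"
  if [ ! -f "$file" ]; then
    echo "kuse: no kubeconfig at $file" >&2
    return 1
  fi
  # kubectl reads the KUBECONFIG environment variable to locate its config
  export KUBECONFIG="$file"
  echo "now using $file"
}
```

After `kuse staging`, every kubectl command in that shell session talks to the staging cluster. For a one-off command against a different cluster, you can instead pass the file directly with `kubectl --kubeconfig <file> get pods`.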

From what we have seen at Six Feet Up, Kubernetes is usually the right answer.

Even teams with no prior containerization experience find that after an initial learning period, everyday work becomes straightforward. Most developers rely on a small set of commands and patterns, and Kubernetes fades into the background as a reliable platform.

It quietly schedules workloads, recovers from failures, and keeps environments consistent so teams can focus on delivering features instead of fighting infrastructure.

Whether it is the right choice for you depends on your business needs, not just technical curiosity:

If the answer to those questions is “yes,” then investing in a Kubernetes-based platform with its everyday practices can be a meaningful step toward a more resilient, scalable organization.

To see how these ideas show up in production, explore Six Feet Up’s recent projects involving Kubernetes.